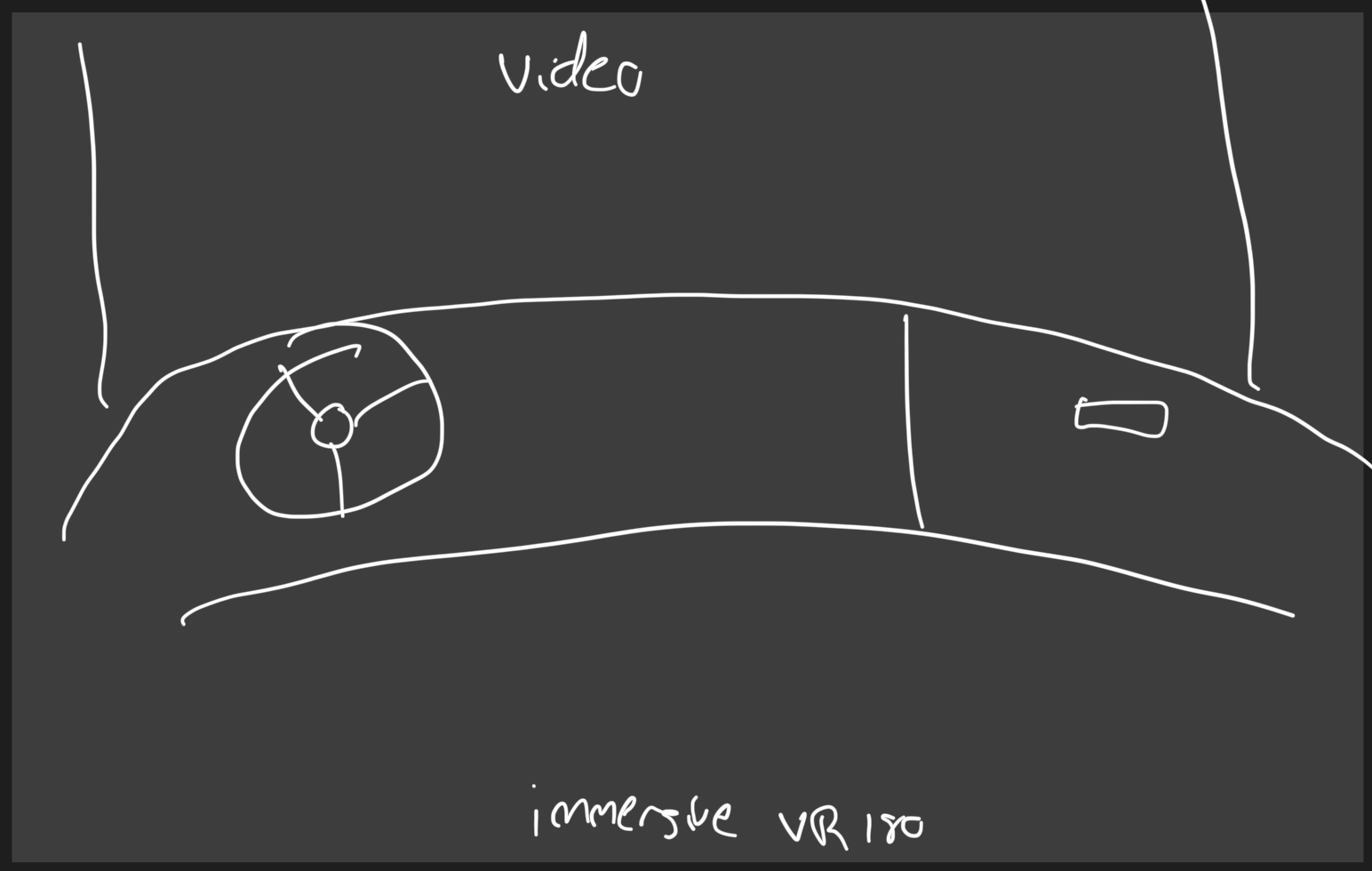

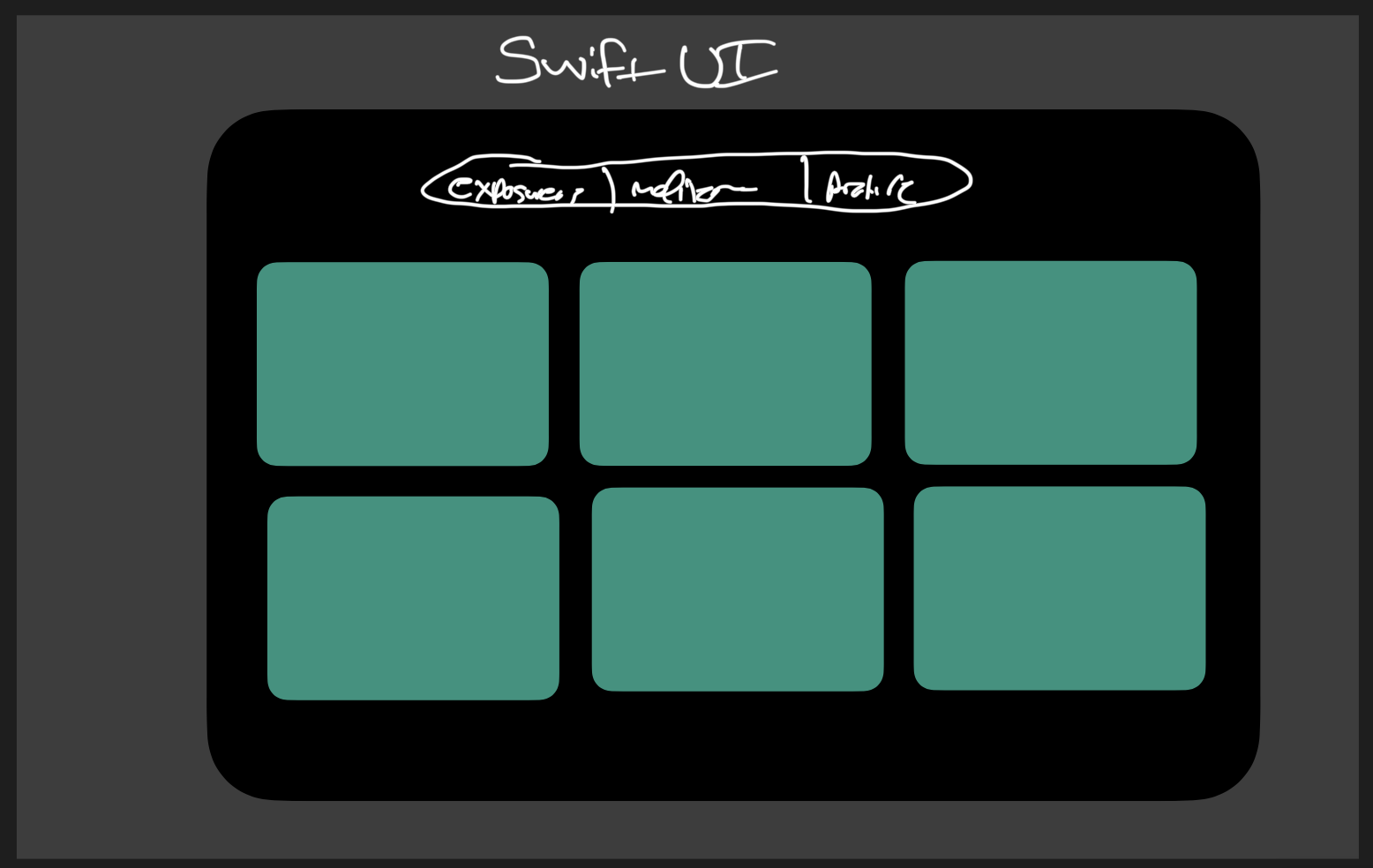

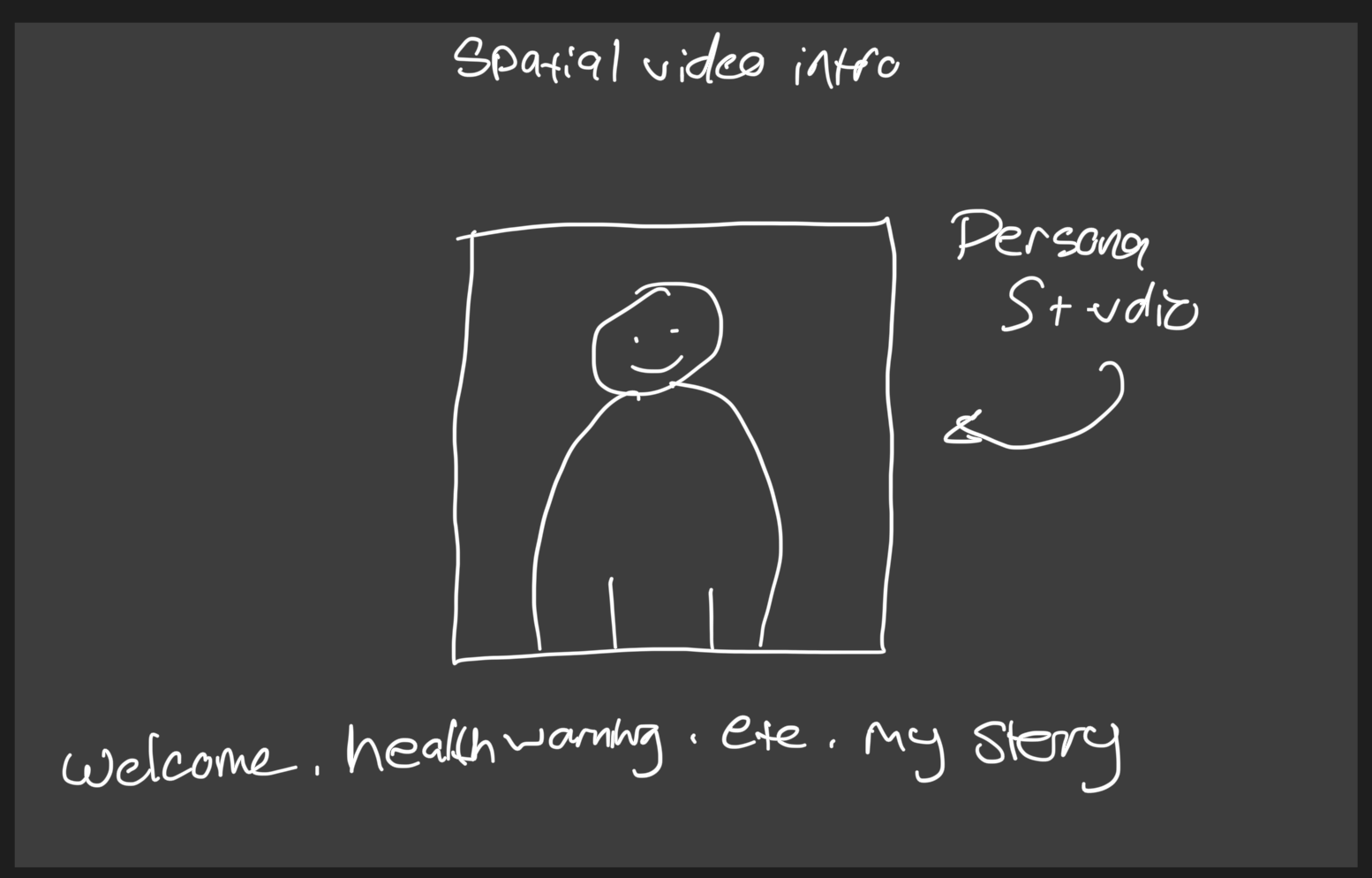

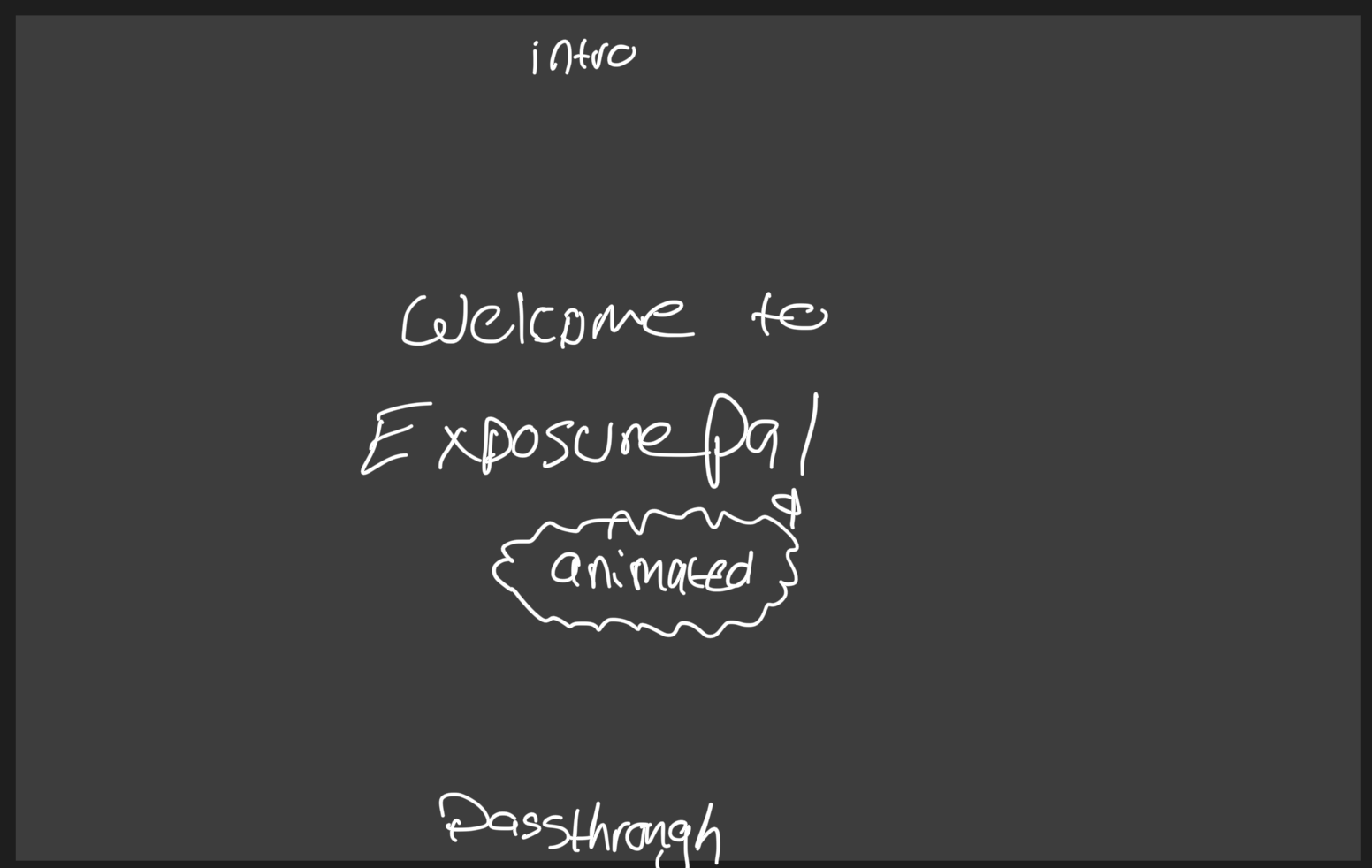

I’m working on getting back to creating VR180 content and have a more solid plan for an exposure therapy app. Storyboard:

The most challenging aspect, and why I’ve never quite done more than some test shots:

“Affordable” cameras kinda suck. That was taken on an Insta360 X3. I just got done with the Insta360 X4 at “8K” and can’t stand the sensor noise. You can clean it up in post with Neat Video or Topaz AI, but even on a 7950X3D/4090 rig, 4 minutes of video takes 4+ hours to process because the frames are so high-res!
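A quick sanity check on that render time, as a minimal sketch: the slowdown factor is processing time divided by clip length, and dividing the frame count by processing time gives the effective denoise throughput. The 30 fps capture rate is an assumption for illustration, not something stated above.

```python
def denoise_throughput(clip_minutes, render_hours, fps):
    """Return (slowdown vs. real time, frames processed per second)."""
    clip_seconds = clip_minutes * 60
    render_seconds = render_hours * 3600
    frames = clip_seconds * fps  # total frames in the clip
    return render_seconds / clip_seconds, frames / render_seconds

# 4 minutes of footage, 4 hours of processing; 30 fps is an assumed capture rate.
slowdown, frames_per_second = denoise_throughput(4, 4, 30)
print(f"{slowdown:.0f}x slower than real time, ~{frames_per_second:.2f} frames/s")
```

At that rate, every extra minute of footage costs another hour of render time, which is why the noise has to be solved at capture.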

So screw it: I found a refurbished/used Canon R5 C, which shoots full-frame 8K60p. But I’m NOT going with the Canon RF5.2mm F2.8 L Dual Fisheye, at least not yet. What’s the point of stereoscopy when human stereo vision breaks down so quickly with distance?

- Close Distances (up to 20 feet/6 meters): Stereoscopic vision is very effective. Depth perception is highly accurate.

- Intermediate Distances (20-100 feet/6-30 meters): Stereoscopic vision still functions well, but depth perception becomes less precise as distance increases.

- Far Distances (beyond 100-200 feet/30-60 meters): Stereoscopic vision begins to break down, and the brain relies more on other depth cues, such as size, perspective, and motion, to perceive depth.
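Why it falls off so fast can be sketched with the small-angle approximation: binocular disparity is roughly IPD / d, so the smallest depth step you can resolve by stereo alone grows with the square of distance. The 63 mm IPD and 20 arcsecond stereoacuity below are assumed typical lab values, not measurements; real-world thresholds are usually worse.

```python
import math

ARCSEC_TO_RAD = math.pi / (180 * 3600)

def depth_resolution_m(distance_m, ipd_m=0.063, stereoacuity_arcsec=20.0):
    """Smallest depth difference resolvable by stereo alone at a given distance.

    Disparity ~ ipd / d, so a depth change delta_d changes disparity by
    ~ ipd * delta_d / d**2. Setting that equal to the stereoacuity threshold
    and solving gives delta_d = d**2 * threshold / ipd.
    ipd_m and stereoacuity_arcsec defaults are assumptions.
    """
    threshold_rad = stereoacuity_arcsec * ARCSEC_TO_RAD
    return distance_m ** 2 * threshold_rad / ipd_m

for d in (6, 30, 60, 100):
    print(f"{d:>4} m -> can resolve depth steps of ~{depth_resolution_m(d):.2f} m")
```

The quadratic term is the whole story: doubling the distance quadruples the smallest depth difference stereo can pick out.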

vs.

- City Driving (25-35 mph / 40-55 km/h): Drivers usually look about 1-2 blocks ahead, which translates to roughly 200-400 feet (60-120 meters).

- Highway Driving (55-70 mph / 90-110 km/h): Drivers often look 12-15 seconds ahead, which at these speeds is approximately 1000-1500 feet (300-450 meters).

- Rural or Open Road Driving: In more open areas with higher speeds and fewer visual obstructions, drivers might look even further ahead, up to 20 seconds, which can be around 1500-2000 feet (450-600 meters) or more.
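The highway and rural figures are just speed × time. A minimal check (the 70 mph for the rural case is an assumption):

```python
FEET_PER_MILE = 5280
METERS_PER_FOOT = 0.3048

def look_ahead_feet(speed_mph, seconds):
    """Distance covered in `seconds` at `speed_mph`."""
    feet_per_second = speed_mph * FEET_PER_MILE / 3600
    return feet_per_second * seconds

for label, mph, secs in [("highway, low end", 55, 12),
                         ("highway, high end", 70, 15),
                         ("rural, assumed 70 mph", 70, 20)]:
    ft = look_ahead_feet(mph, secs)
    print(f"{label}: {ft:.0f} ft ({ft * METERS_PER_FOOT:.0f} m)")
```

Even the shortest highway look-ahead lands well past the ~100 m range where stereo depth has already faded.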

I can thank openpilot for my understanding of that, and for years of people wanting dual cameras for “depth” when it’s moot. Thanks… George Hotz, you prick, haha.

I just think VR180 is kinda a novelty. Watch some VR180 adult content and notice how you go cross-eyed when the performer gets too close to the camera, plus the general chromatic aberration, etc.

With a dual fisheye you end up using less than half of the full-frame sensor per eye, which works out to less than 4K per eye.
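A rough pixel budget, as a sketch: with two image circles side by side, each eye's circle is capped by half the sensor width (and by the sensor height). The 8192×4320 recording size is assumed to be the R5 C's 8K DCI mode, and the mono case is a best case where the horizontal 180° is framed across the full width.

```python
R5C_8K_WIDTH, R5C_8K_HEIGHT = 8192, 4320  # assumed 8K DCI recording size

def stereo_eye_width_px(sensor_width_px, sensor_height_px):
    """Two circles side by side: each is limited by half the width and by the height."""
    return min(sensor_width_px // 2, sensor_height_px)

def pixels_per_degree(px, fov_deg=180.0):
    """Horizontal angular resolution if `px` pixels span `fov_deg` degrees."""
    return px / fov_deg

stereo_px = stereo_eye_width_px(R5C_8K_WIDTH, R5C_8K_HEIGHT)
print(f"stereo: {stereo_px} px per eye, ~{pixels_per_degree(stereo_px):.1f} px/deg")
# Best case for mono: horizontal 180 degrees spread across the full sensor width.
print(f"mono (best case): ~{pixels_per_degree(R5C_8K_WIDTH):.1f} px/deg")
```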

I want to do mono VR180 because we don’t need stereo outside the car: nothing out there will read as stereoscopic anyway, given how human eyes work. Sure, the car interior would be 3D, but staring at the inside of a car usually isn’t good for motion sickness, even in real life, and it could be distracting.

This is a TTArtisan 7.5mm F2.0 APS-C fisheye on a full-frame body (E-mount, I think); the green lines mark an imaginary APS-C sensor.

Here’s the Canon EF 8-15mm f/4L Fisheye which can be adapted to the R5 C as well.

The only “issue” with the 7.5mm F2.0 is that it doesn’t project an exact 180° circle onto the sensor, so I’m gonna have to calibrate it to know exactly what the horizontal/vertical FOV is for the metadata so it projects properly. It still seems to be the best fit for my use case: we retain left/right head movement, and we don’t really need full up/down head movement in a car since that would just be the floorboard/roof. That maximizes sensor area for what we care about.
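One way that calibration could work, as a minimal sketch: assume an equidistant fisheye model (r = k·θ), photograph targets at known angles off the optical axis, fit k by least squares, then convert the sensor edges and image-circle radius into horizontal/vertical FOV. The equidistant model is an assumption (the TTArtisan's actual projection isn't confirmed here), and all sample numbers are made up.

```python
def fit_equidistant_k(samples):
    """Least-squares fit of r = k * theta through the origin.

    samples: (theta_deg, r_px) pairs, where theta is a target's known angle off
    the optical axis and r is its measured distance from the image center in px.
    Returns k in pixels per degree.
    """
    num = sum(theta * r for theta, r in samples)
    den = sum(theta * theta for theta, _ in samples)
    return num / den

def sensor_fov_deg(k_px_per_deg, width_px, height_px, circle_radius_px):
    """Recorded horizontal/vertical FOV, clipped by the sensor edge or the image circle."""
    h = 2 * min(width_px / 2, circle_radius_px) / k_px_per_deg
    v = 2 * min(height_px / 2, circle_radius_px) / k_px_per_deg
    return h, v

# Hypothetical measurements: targets at 10, 30 and 60 degrees off-axis.
k = fit_equidistant_k([(10, 250.0), (30, 750.0), (60, 1500.0)])
h_fov, v_fov = sensor_fov_deg(k, 8192, 4320, circle_radius_px=2300)
print(f"k = {k:.1f} px/deg, FOV = {h_fov:.1f} x {v_fov:.1f} deg")
```

In this made-up example the circle overflows the sensor vertically, so the vertical FOV comes out under 180° while the horizontal stays above it, which is the "not exactly a circular 180" situation.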

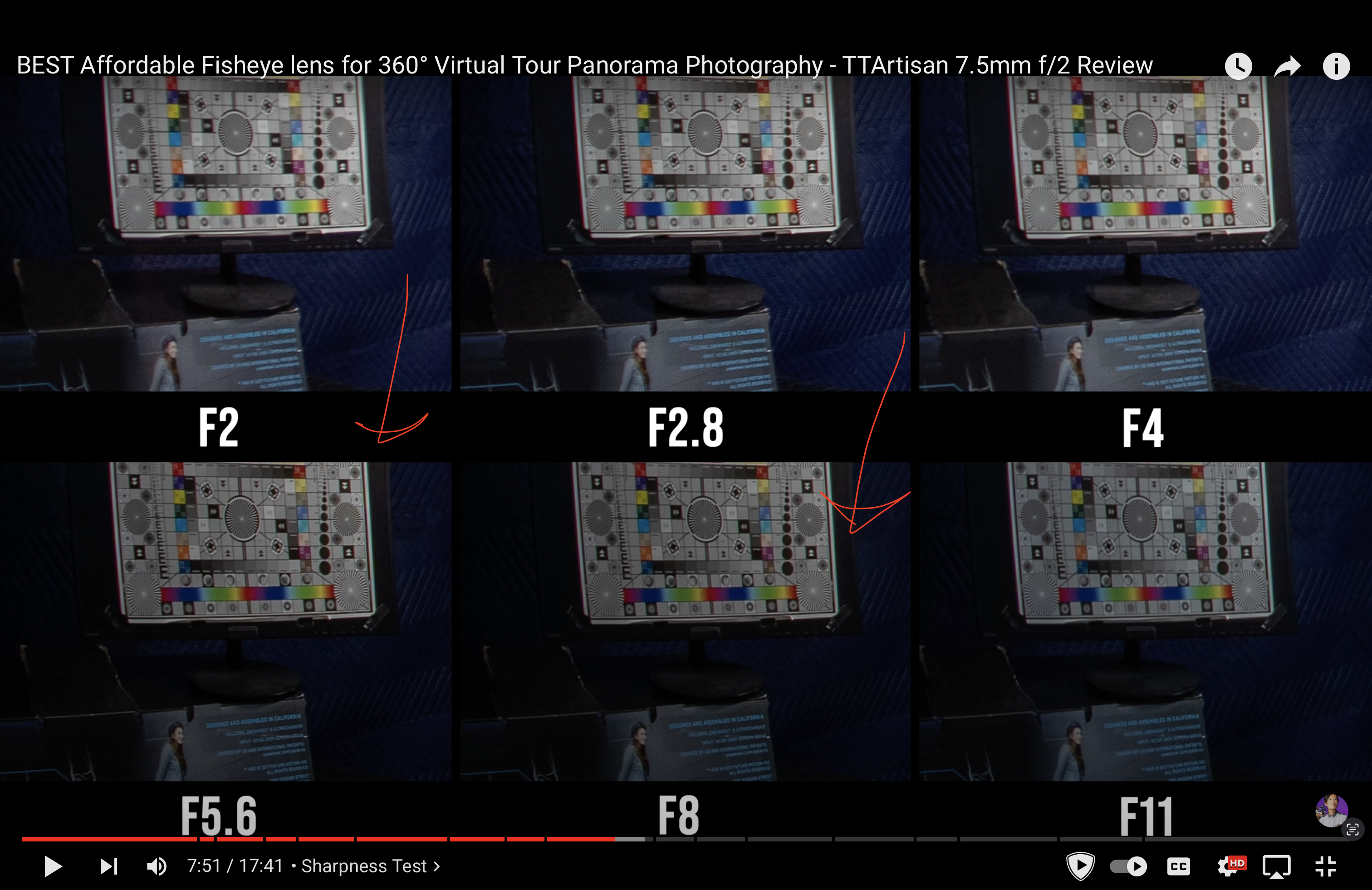

Camera lenses, stereo, VR180, etc. are kind of a bitch to understand and think about, and then you have aperture, ISO, focus, etc. to contend with. For example, f/5.6 and f/8 should be the sharpest apertures for this lens:
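Focus is mostly a non-issue at those apertures. Under the thin-lens hyperfocal formula H = f²/(N·c) + f, focusing at H keeps everything from roughly H/2 to infinity acceptably sharp. The 0.03 mm circle of confusion is the common full-frame convention and an assumption here; pixel-peeping 8K may call for a smaller value, and fisheye edges won't follow the thin-lens model exactly.

```python
def hyperfocal_mm(focal_mm, f_number, coc_mm=0.03):
    """Thin-lens hyperfocal distance in mm (coc_mm is an assumed circle of confusion)."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

for n in (2.0, 5.6, 8.0):
    h = hyperfocal_mm(7.5, n)
    print(f"f/{n}: H = {h / 1000:.2f} m, sharp from ~{h / 2000:.2f} m to infinity")
```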

It’s all going to boil down to getting the ffmpeg v360 FOV mappings correct, but once it’s dialed in, it’s dialed in. It’s also handy that the R5 C doesn’t have IBIS, so we don’t have to worry about the sensor shifting and introducing judder/vibration into the image in the car.
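A sketch of what that mapping step could look like: a helper that builds (but doesn't run) an ffmpeg command using the v360 filter to remap fisheye input to half-equirectangular output. The `ih_fov`/`iv_fov` values are placeholders for calibrated numbers, the 4096×4096 output and 20M bitrate are assumptions, and the file names are hypothetical. The output would still need VR180 spherical metadata injected separately (not shown).

```python
import shlex

def v360_command(src, dst, ih_fov, iv_fov, width=4096, height=4096, bitrate="20M"):
    """Build an ffmpeg command remapping fisheye footage to half-equirect (hequirect).

    ih_fov / iv_fov: calibrated input horizontal/vertical FOV in degrees.
    width / height: output resolution passed to v360's w/h options.
    """
    vf = (
        "v360=input=fisheye:output=hequirect"
        f":ih_fov={ih_fov}:iv_fov={iv_fov}"
        f":w={width}:h={height}"
    )
    return [
        "ffmpeg", "-i", src,
        "-vf", vf,
        "-c:v", "libx265", "-b:v", bitrate,
        "-tag:v", "hvc1",  # hvc1 tag so Apple players recognize the HEVC stream
        dst,
    ]

# Placeholder FOVs until the lens is actually calibrated.
cmd = v360_command("in.mov", "out_vr180_mono.mp4", ih_fov=184, iv_fov=172.8)
print(shlex.join(cmd))
```

Building the argument list rather than a shell string keeps paths with spaces safe if it's later passed to `subprocess.run(cmd, check=True)`.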

Still, 8K60p files are gonna be a pain to deal with just due to their sheer size. Thankfully I can copy what the adult VR180 industry is doing: it seems they use H.265 at 20Mbps for 8K stereo content, 8000×4000 for both eyes. Here’s the MediaInfo output for one of their demo files:

```text
General
Complete name              : /Users/Downloads/SLR_SLR Labs_Apple Vision Pro 8k demo_4000p_42338_FISHEYE190.mp4
Format                     : MPEG-4
Format profile             : Base Media
Codec ID                   : isom (isom/iso2/mp41)
File size                  : 225 MiB
Duration                   : 1 min 0 s
Overall bit rate mode      : Variable
Overall bit rate           : 31.4 Mb/s
Frame rate                 : 59.940 FPS
Writing application        : Lavf61.0.100

Video
ID                         : 1
Format                     : HEVC
Format/Info                : High Efficiency Video Coding
Format profile             : Main@L6.1@High
Codec ID                   : hvc1
Codec ID/Info              : High Efficiency Video Coding
Duration                   : 1 min 0 s
Bit rate                   : 31.2 Mb/s
Width                      : 8 000 pixels
Height                     : 4 000 pixels
Display aspect ratio       : 2.000
Frame rate mode            : Constant
Frame rate                 : 59.940 (60000/1001) FPS
Color space                : YUV
Chroma subsampling         : 4:2:0 (Type 0)
Bit depth                  : 8 bits
Scan type                  : Progressive
Bits/(Pixel*Frame)         : 0.016
Stream size                : 223 MiB (99%)
Writing library            : x265 3.2.1+1-
```

I’ll be focusing on mono VR180, so I can halve the horizontal resolution, do something like 4096×2048, and use a square equiangular layout so we don’t have black borders in the output video.
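A rough bitrate estimate for that, as a sketch: the MediaInfo numbers give the sample's bits per pixel per frame, and holding that density constant scales the bitrate to a 4096×2048 mono frame. Constant bits-per-pixel is a crude heuristic (x265 rate control doesn't scale linearly), so treat the result as a starting point only.

```python
def bits_per_pixel_frame(bitrate_bps, width, height, fps):
    return bitrate_bps / (width * height * fps)

def bitrate_for(bpp, width, height, fps):
    return bpp * width * height * fps

def size_mib(bitrate_bps, seconds):
    return bitrate_bps * seconds / 8 / 2**20

FPS = 60000 / 1001  # 59.940, from the MediaInfo output
sample_bpp = bits_per_pixel_frame(31.2e6, 8000, 4000, FPS)
mono_bps = bitrate_for(sample_bpp, 4096, 2048, FPS)
print(f"sample: {sample_bpp:.3f} bits/(pixel*frame)")
print(f"4096x2048 mono at the same density: ~{mono_bps / 1e6:.1f} Mb/s")
print(f"1 min at 31.4 Mb/s: ~{size_mib(31.4e6, 60):.0f} MiB")
```

The first and last lines reproduce MediaInfo's 0.016 bits/(pixel*frame) and 225 MiB file size, which is a useful check that the arithmetic matches the file.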

Although many 360 videos are a minimum of 4K, content can often still look very blurry. I’ll explain why. When viewing 360 video content, the viewer is only seeing a small slice of the 360 footage at a given time within their field of view. This means that a 3840×1920 360 video is actually only displaying about 1280×720 in the viewing portal at a given time. This is why VR video content sometimes looks like television from the 1990s. For this reason, every pixel counts!
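The 1280×720 figure falls out of simple proportion: the visible slice is the frame's pixels scaled by the fraction of its field of view on screen. The ~120°×67.5° viewport is an assumption back-derived from that figure, not a headset spec, and the 4096×4096 VR180 frame is a hypothetical comparison.

```python
def viewport_pixels(frame_w, frame_h, frame_hfov, frame_vfov, view_hfov, view_vfov):
    """Pixels of an equirectangular frame that fall inside the visible viewport."""
    return (round(frame_w * view_hfov / frame_hfov),
            round(frame_h * view_vfov / frame_vfov))

# 360 video: 3840x1920 spans 360 x 180 degrees; assumed ~120 x 67.5 degree view.
print(viewport_pixels(3840, 1920, 360, 180, 120, 67.5))
# Hypothetical 180 x 180 degree VR180 frame at 4096x4096, same assumed view.
print(viewport_pixels(4096, 4096, 180, 180, 120, 67.5))
```

Spending the same pixel width on 180° instead of 360° is exactly why VR180 looks sharper than 360 at the same resolution.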

Anyway! My Vision Pro arrives today, so I’ll get lost in that for a bit and see whether it sucks or is magical. I really don’t want to fuck with the Quest 3, even though I own one, because I loathe Android development. The AVP also has the most magical feature of them all: high-quality screens and foveated rendering, so you can display higher-quality video than anything else right now. Let’s hope Apple keeps the spatial computing going.